The project focused on the challenges that broadcast media face, particularly in interactivity and live broadcasting. New tools and technologies (ubiquitous networked mobile video devices; tracked and instrumented cameras; software for capturing, encoding and streaming video) offer new possibilities.

Introduction

Blast Theory, the Mixed Reality Lab at the University of Nottingham and Somethin’ Else investigated a range of ubiquitous technologies such as mobile phone cameras, webcams and camcorders. The project looked at how these might be integrated into an accessible platform for online video broadcasting that could offer a low-cost, lightweight, interactive alternative to the existing models of outside broadcasting used in television.

The project resulted in the design of a streaming platform using camcorders and 3G netbooks, the development of mobile phone software to stream location data alongside live video, and a lightweight video and data server built on open source software.

The partners also collaborated on designing potential applications for the platform, exploring ideas ranging from crowdsourced video coverage of large-scale events to location-based games using video.

Public Demonstrator

The project led to the design of a public demonstrator staged in May 2010: a mixed reality game called SNAP.

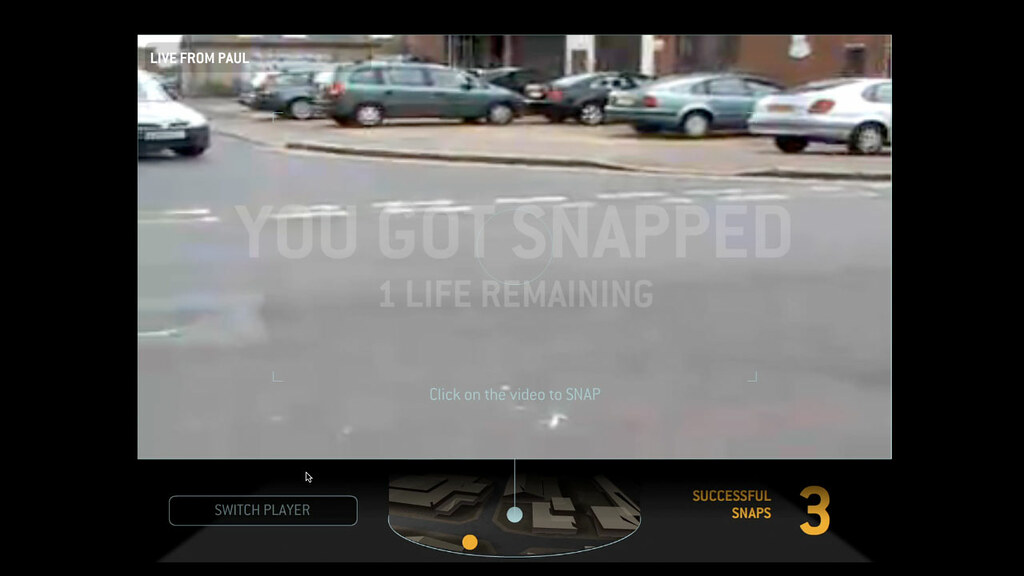

SNAP is a game for players online and on the street. Three street players, equipped with mobile cameras streaming live video and audio, run through the city while their locations are tracked via GPS. Each street player attempts to film the others without being filmed themselves. The goal is for street players to stalk and film one another around the city, directed by online players who earn points for capturing snapshots of street players from their video feeds.

Online players can view a virtual model of the city and see the positions of the three street players. They can choose one of the street players and follow their video stream. If they see one of the other street players onscreen, they can click to capture a “Snap”. The online player who captures the most snaps is the winner.

When a snap is taken of a street player, any online player following that street player’s stream loses one of their three lives.
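The scoring rules above can be summarised as a small state machine. The sketch below is illustrative only: the class and method names are hypothetical, and two rules not stated in the description are assumptions (an online player with no lives left cannot score, and a player cannot snap the street player whose own feed they are watching).

```python
from __future__ import annotations

from dataclasses import dataclass, field

STARTING_LIVES = 3  # each online player starts with three lives


@dataclass
class OnlinePlayer:
    name: str
    following: str  # id of the street player whose stream is being watched
    lives: int = STARTING_LIVES
    snaps: int = 0


@dataclass
class SnapGame:
    players: dict[str, OnlinePlayer] = field(default_factory=dict)

    def join(self, name: str, street_player: str) -> None:
        self.players[name] = OnlinePlayer(name, street_player)

    def follow(self, name: str, street_player: str) -> None:
        # Online players may switch between street players' streams.
        self.players[name].following = street_player

    def snap(self, snapper: str, target: str) -> None:
        """Record that `snapper` captured street player `target` on screen."""
        shooter = self.players[snapper]
        if shooter.lives <= 0:
            return  # assumed rule: eliminated players cannot score
        if target == shooter.following:
            return  # assumed rule: the filming player is not in their own feed
        shooter.snaps += 1
        # Every online player following the snapped street player loses a life.
        for p in self.players.values():
            if p.following == target and p.lives > 0:
                p.lives -= 1

    def winner(self) -> str | None:
        # Most snaps wins; tie-breaking is not specified, so max() keeps the first.
        if not self.players:
            return None
        return max(self.players.values(), key=lambda p: p.snaps).name
```

For example, if "alice" follows street player "red" while "bob" follows "blue", a snap by alice of "blue" gives alice one point and costs bob one life.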

Platform

The platform takes a novel approach to encoding, streaming and presenting live video from mobile devices alongside live contextual data such as camera position, orientation and game data. The platform provides a prototype for a number of innovative technologies:

- A 3D Flash-based interface for navigating multiple live video streams

- A mechanism for streaming and storing live video with embedded contextual data, such as location, user and game data

- A game engine and spatial database for running spatially driven logic on data from multiple mobile devices

- A potential architecture for live encoding and streaming over RTP from mobile phones and transcoding for multiple clients, including Flash, HTML5-compliant browsers and mobile phones
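One way to present video alongside contextual data is to pair each video frame with the location sample nearest to it in time. The description does not say how the platform did this, so the sketch below is an assumption: it supposes that the phone and the camera stamp their data against a shared clock in milliseconds, and the `Telemetry` type and `nearest_sample` function are hypothetical.

```python
from __future__ import annotations

import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class Telemetry:
    t_ms: int  # capture time in milliseconds on a shared clock (assumed)
    lat: float
    lon: float
    heading_deg: float


def nearest_sample(samples: list[Telemetry], frame_t_ms: int) -> Telemetry:
    """Return the telemetry sample closest in time to a video frame.

    `samples` must be non-empty and sorted by t_ms.
    """
    times = [s.t_ms for s in samples]
    i = bisect.bisect_left(times, frame_t_ms)
    if i == 0:
        return samples[0]
    if i == len(samples):
        return samples[-1]
    before, after = samples[i - 1], samples[i]
    # Pick whichever neighbour is closer; ties go to the earlier sample.
    if frame_t_ms - before.t_ms <= after.t_ms - frame_t_ms:
        return before
    return after
```

Binary search keeps each lookup logarithmic in the number of stored samples, which matters when a recorded stream is replayed with a long telemetry history.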

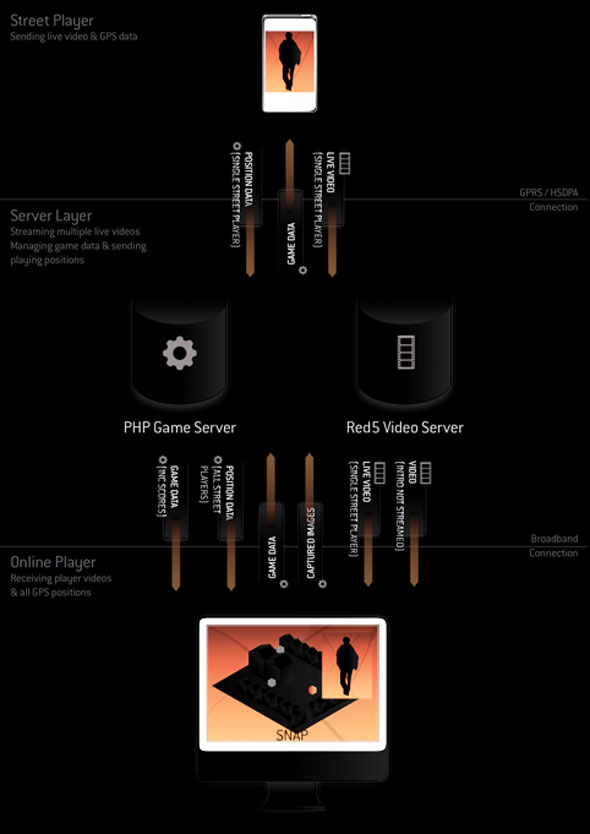

It comprises three parts:

- An online interface which displays live video, game and 3D location data

- A video and game server

- A mobile platform which shoots, encodes and streams live video and location data

Online Interface

The online interface has been designed to connect players to the events taking place live on the streets. As the player loads the game, the interface plays an introductory movie while, in the background, it loads the video streams and location data for the street players.

Street players’ positions are loaded into a 3D view of the city allowing online players to switch between the video streams of different street players.
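Placing GPS fixes in a 3D city model requires converting latitude and longitude into the model's local coordinates. The original does not describe the conversion used; the sketch below assumes an equirectangular approximation around a chosen model origin, which is generally adequate over a few kilometres. The function name and axis convention (x east, z north, in metres) are hypothetical, and any scaling or rotation to match the exported model is left out.

```python
from __future__ import annotations

import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def gps_to_local(lat: float, lon: float,
                 origin_lat: float, origin_lon: float) -> tuple[float, float]:
    """Project a GPS fix onto a flat x/z plane centred on the model origin.

    Equirectangular approximation: x grows eastwards, z grows northwards,
    both in metres.
    """
    d_lat = math.radians(lat - origin_lat)
    d_lon = math.radians(lon - origin_lon)
    # Lines of longitude converge towards the poles, so scale by cos(latitude).
    x = EARTH_RADIUS_M * d_lon * math.cos(math.radians(origin_lat))
    z = EARTH_RADIUS_M * d_lat
    return x, z
```

A fix 0.001 degrees north of the origin lands roughly 111 m along the z axis; at 60 degrees latitude, 0.001 degrees of longitude spans only about 56 m along x.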

When an online player snaps a street player in the live video, the interface grabs the current frame from the video stream and encodes it as a JPEG image. The image is uploaded to the game server and stored so that it can be shown back to the players when their game is finished.

The interface uses Adobe Flash and Papervision3D, an open source 3D library. The models are created in standard 3D design software and exported to COLLADA (.dae), an open interchange format.

Video and game server

The server uses a cloud-based installation of Red5 to provide a scalable platform for video streaming alongside an event driven game engine and spatial database.
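As a hypothetical illustration of event-driven, spatially driven logic, the sketch below keeps the latest GPS fix for each street player in memory (standing in for the spatial database, whose actual schema is not described) and answers "who is within this radius?" queries using the haversine great-circle distance. The class, method and event names are assumptions.

```python
from __future__ import annotations

import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two lat/lon points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


class SpatialIndex:
    """Latest known position per street player (in-memory stand-in)."""

    def __init__(self) -> None:
        self.positions: dict[str, tuple[float, float]] = {}

    def on_location(self, player: str, lat: float, lon: float) -> None:
        # Event handler: called whenever a phone reports a new GPS fix.
        self.positions[player] = (lat, lon)

    def within(self, lat: float, lon: float, radius_m: float) -> list[str]:
        # Linear scan is fine for three players; a real spatial database
        # would use an index instead.
        return sorted(
            p for p, (plat, plon) in self.positions.items()
            if haversine_m(lat, lon, plat, plon) <= radius_m
        )
```

With only a handful of moving players, a linear scan is simpler than maintaining an index; the same query shape would map onto a radius search in a spatial database.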

Red5 is an open source media server designed to stream video for playback in Adobe Flash.

Street Player

The street player carries a digital camcorder and a small solid-state netbook to encode and stream video over 3G, alongside an Android mobile phone to gather and stream location data.

Research

The key aims of the project were:

- To develop a system utilising and building upon existing technologies that are commercially available, reliable and low cost

- To provide a facility for audience interaction with live events and thus open up new markets in streamed video

- To capitalise on and exploit the outcomes of the ongoing research partnership between artists, scientists and industry

Partner contributions

The Mixed Reality Lab provided the core programming and software resources for the project, undertaking development in:

- A prototype telemetry data server

- Streaming video from mobile devices

- Streaming telemetry data from mobile devices

- Using Google Earth to produce mobile camera planning utilities

Somethin’ Else took a key role in sharing information about industry-standard equipment, practices and techniques; contributing to design discussions and giving guidance on format development; filming the public demonstrator; and advising on potential avenues for future exploitation.

Blast Theory led production and creative development: setting goals for research, leading creative discussions, hosting design and software workshops with the team and designing the final SNAP game, including the Flash interface.

Activities

May – June 2009

The project was launched with a kick-off meeting in London at the offices of broadcast partner Somethin’ Else. The meeting brought together the whole creative and software teams for the first time, allowing Blast Theory to discuss the opportunities of the project, agree roles and teams and draft a work plan for the year.

This was followed by a technical launch to define key areas for research and agree a number of discrete, simple prototypes for the production and software teams to build over the following three months.

June – September 2009

Following the technical launch, the team worked on seven prototypes. These ranged from comparative performance tests of different cameras and streaming infrastructures to creative development of user scenarios and web interfaces.

At the end of this period Blast Theory hosted a 2-day in-house demonstration, running a platform which integrated:

- Live video and audio streaming from:

  - Mobile phones

  - Handheld video cameras connected over 3G

  - Remote-controlled and unmanned cameras

- Telemetry data from mobile phones

- Web interfaces for showing multiple streams and live camera locations in Google Earth

The results of each of the prototypes were reviewed and the whole team were invited to discuss which areas offered the most potential for development for a public demonstrator in 2010.

October – December 2009

Over this period, the creative team held discussions and ran practical tests to develop performance ideas, drawing on the lessons and technology of the in-house demonstration. This generated a number of potential interactive, broadcast and game formats. Three formats were given preliminary design treatments: a real-time drama, a flash mob/documentary and a chase game. Of these, the chase game was chosen for further development as the public demonstrator in 2010.

The software team’s focus in this period was designing a flexible and scalable final infrastructure for the video streaming platform, rewriting the mobile phone software and writing the software specification for the first platform tests in January 2010.

January – April 2010

The final description of the SNAP game for the public demonstrator was agreed alongside ongoing mobile phone software development. The creative team ran walk-throughs of the game with performers to test different game styles and mechanics and to gather video material for the online interface.

The final phase of work included platform integration, completion of game orchestration tools, reviewing and re-storyboarding the SNAP online player interface and the completion of a 3D model of the game area.

May 2010

SNAP was staged as a public demonstration of the tools and platform developed during the project.